helm源码分析

本文主要包含了helm服务器的启动过程和install命令的整个执行过程。

Project link:http://helm.sh

本文参考自:http://blog.csdn.net/yan234280533/article/category/6389651/1

grpc文档:http://doc.oschina.net/grpc?t=57966

chart模板:https://github.com/kubernetes/charts/tree/master/stable

helm作为Kubernetes一个包管理引擎,基于chart的概念,有效的对Kubernetes上应用的部署进行了优化。Chart通过模板引擎,下方对接Kubernetes中services模型,上端打造包管理仓库。最后的使得Kubernetes中,对应用的部署能够达到像使用apt-get和yum一样简单易用。

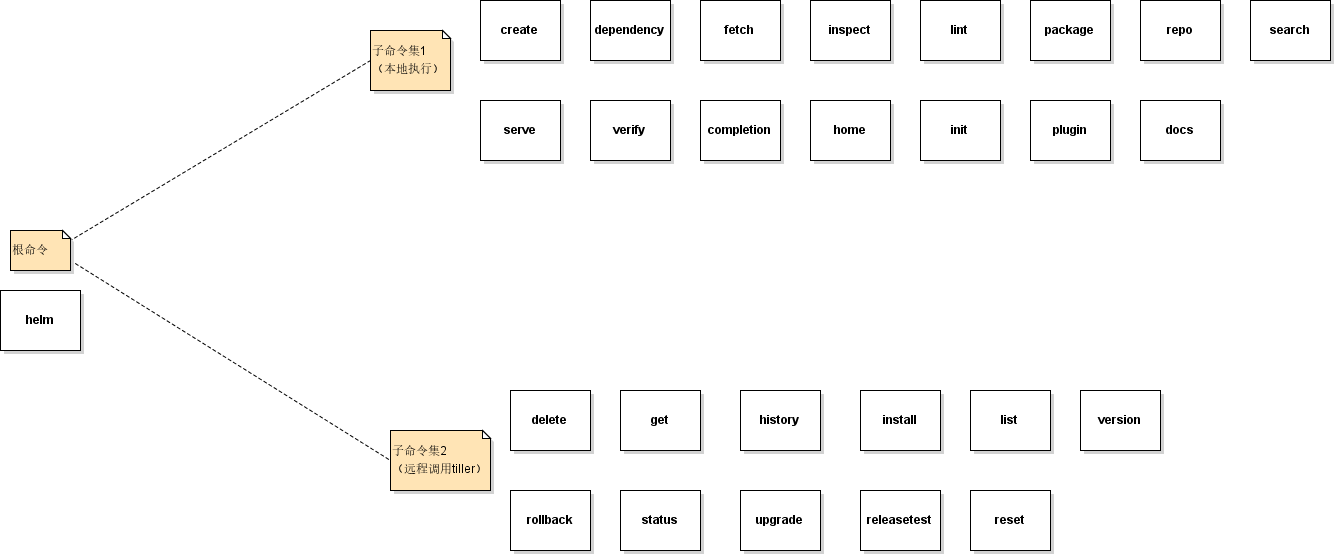

Helm目录结构和Kubernetes中其他组件目录结构类似,模块的起始位置在cmd文件夹中,实际的工作代码在pkg包中,helm包含两个组件helm客户端和tiller服务端。

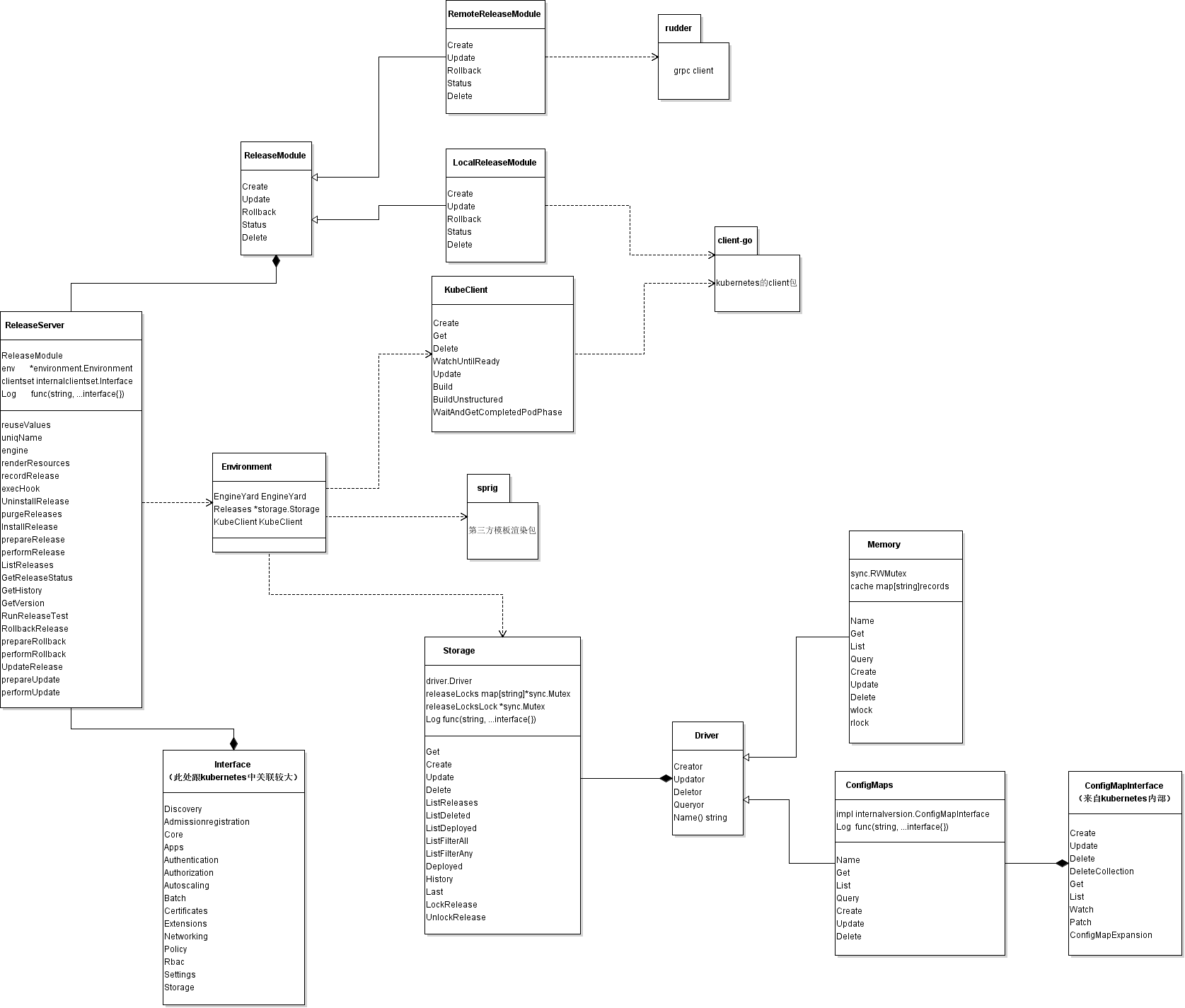

helm客户端主要是将对应分命令进行解析,然后使用gRPC协议发送到服务端处理。Tiller主要通过gRPC协议从客户端接收对应的命令,然后通过模板解析,生成对应的yaml文件,再调用kubeClient创建服务。同时,将生成的yaml文件保存到后端存储中,用于版本升级和回退。

helm客户端结构

tiller类图

简单分析(以详解为准):

helm执行流程:

1、初始化全局变量,创建命令行工具cobra.Command对象,添加子命令及其相关描述。

2、install命令详解:

2.1 命令通过cobra.Command初始化,解析并配置参数和执行函数。

2.2 真正的执行实在run函数。

2.3 处理和配置installCmd结构体中的内容,并以此来初始化一个client对象。

2.4 连接tiller服务端远程调用InstallRelease函数。

2.5 打印response相关信息,再调用以此tiller的ReleaseStatus函数查询Release的Status确认安装完毕,并打印。

tiller执行流程:

解析参数。

获取kubectl内部用的 client的集合。

配置存储类型(memory OR configmap),设置到env变量中。

启动kube client。

如果需要,配置grpc通信的TLS。

启动grpc服务器,即tiller服务器。

根据参数监听端口。

(协程)将tiller的所有服务注册进grpc server,开始服务。

(协程)开启prometheus监听grpc。

select监听上述协程的error channel。

InstallRelease:

prepareRelease:

命名(是否重名),确定API、kubernetes、tiller(proto)版本保存在caps中,时间,配置ReleaseOptions保存在options中。

将request的chart、values和上面生成的options、caps合并到一个map中。

根据chart、上面的map和API版本渲染模板,并根据此结果生成一个Release对象。

验证和更新manifest。

performRelease:

根据Release构建response。

执行hook(PreInstall+install)(类似于数据库中的事件)。

记录Release。

执行hook(PostInstall)。

详解

helm客户端

init()关闭gRPC的log输出到控制台。

func main() {

cmd := newRootCmd()

if err := cmd.Execute(); err != nil {

os.Exit(1)

}

}

初始化命令行,执行命令。

func newRootCmd() *cobra.Command {

cmd := &cobra.Command{

Use: "helm",

Short: "The Helm package manager for Kubernetes.",

Long: globalUsage,

SilenceUsage: true,

PersistentPreRun: func(cmd *cobra.Command, _ []string) {

initRootFlags(cmd)

},

PersistentPostRun: func(*cobra.Command, []string) {

teardown()

},

}

addRootFlags(cmd)

out := cmd.OutOrStdout()

cmd.AddCommand(

// chart commands

newCreateCmd(out),

newDependencyCmd(out),

newFetchCmd(out),

newInspectCmd(out),

newLintCmd(out),

newPackageCmd(out),

newRepoCmd(out),

newSearchCmd(out),

newServeCmd(out),

newVerifyCmd(out),

// release commands

addFlagsTLS(newDeleteCmd(nil, out)),

addFlagsTLS(newGetCmd(nil, out)),

addFlagsTLS(newHistoryCmd(nil, out)),

addFlagsTLS(newInstallCmd(nil, out)),

addFlagsTLS(newListCmd(nil, out)),

addFlagsTLS(newRollbackCmd(nil, out)),

addFlagsTLS(newStatusCmd(nil, out)),

addFlagsTLS(newUpgradeCmd(nil, out)),

addFlagsTLS(newReleaseTestCmd(nil, out)),

addFlagsTLS(newResetCmd(nil, out)),

addFlagsTLS(newVersionCmd(nil, out)),

newCompletionCmd(out),

newHomeCmd(out),

newInitCmd(out),

newPluginCmd(out),

// Hidden documentation generator command: 'helm docs'

newDocsCmd(out),

// Deprecated

markDeprecated(newRepoUpdateCmd(out), "use 'helm repo update'\n"),

)

// Find and add plugins

loadPlugins(cmd, out)

return cmd

}

新建一个cobra.Command对象作为根命令helm,配置说明信息和PreRun函数initRootFlags(cmd)和PostRun函数teardown(),前者是初始化默认参数和TLS相关文件的,后者是关闭和集群中pod通信的隧道的。

addRootFlags(cmd):定义helm命令的全局参数。

cmd.OutOrStdout()获取命令行输出句柄。

添加子命令,需要调用tiller的加上TLS相关文件参数。

install命令详解

func newInstallCmd(c helm.Interface, out io.Writer) *cobra.Command {

inst := &installCmd{

out: out,

client: c,

}

cmd := &cobra.Command{

Use: "install [CHART]",

Short: "install a chart archive",

Long: installDesc,

PreRunE: setupConnection,

RunE: func(cmd *cobra.Command, args []string) error {

if err := checkArgsLength(len(args), "chart name"); err != nil {

return err

}

debug("Original chart version: %q", inst.version)

if inst.version == "" && inst.devel {

debug("setting version to >0.0.0-a")

inst.version = ">0.0.0-a"

}

cp, err := locateChartPath(inst.repoURL, args[0], inst.version, inst.verify, inst.keyring,

inst.certFile, inst.keyFile, inst.caFile)

if err != nil {

return err

}

inst.chartPath = cp

inst.client = ensureHelmClient(inst.client)

return inst.run()

},

}

f := cmd.Flags()

f.VarP(&inst.valueFiles, "values", "f", "specify values in a YAML file (can specify multiple)")

f.StringVarP(&inst.name, "name", "n", "", "release name. If unspecified, it will autogenerate one for you")

f.StringVar(&inst.namespace, "namespace", "", "namespace to install the release into")

f.BoolVar(&inst.dryRun, "dry-run", false, "simulate an install")

f.BoolVar(&inst.disableHooks, "no-hooks", false, "prevent hooks from running during install")

f.BoolVar(&inst.replace, "replace", false, "re-use the given name, even if that name is already used. This is unsafe in production")

f.StringArrayVar(&inst.values, "set", []string{}, "set values on the command line (can specify multiple or separate values with commas: key1=val1,key2=val2)")

f.StringVar(&inst.nameTemplate, "name-template", "", "specify template used to name the release")

f.BoolVar(&inst.verify, "verify", false, "verify the package before installing it")

f.StringVar(&inst.keyring, "keyring", defaultKeyring(), "location of public keys used for verification")

f.StringVar(&inst.version, "version", "", "specify the exact chart version to install. If this is not specified, the latest version is installed")

f.Int64Var(&inst.timeout, "timeout", 300, "time in seconds to wait for any individual Kubernetes operation (like Jobs for hooks)")

f.BoolVar(&inst.wait, "wait", false, "if set, will wait until all Pods, PVCs, Services, and minimum number of Pods of a Deployment are in a ready state before marking the release as successful. It will wait for as long as --timeout")

f.StringVar(&inst.repoURL, "repo", "", "chart repository url where to locate the requested chart")

f.StringVar(&inst.certFile, "cert-file", "", "identify HTTPS client using this SSL certificate file")

f.StringVar(&inst.keyFile, "key-file", "", "identify HTTPS client using this SSL key file")

f.StringVar(&inst.caFile, "ca-file", "", "verify certificates of HTTPS-enabled servers using this CA bundle")

f.BoolVar(&inst.devel, "devel", false, "use development versions, too. Equivalent to version '>0.0.0-a'. If --version is set, this is ignored.")

return cmd

}

新建一个install对象inst。

新建一个cobra.Command对象install(作为helm的子命令),里面包括预执行函数setupConnection(),作用是在没有设置tiller地址的时候初始化一个kube client和一个连接tiller所在命名空间中的pod的隧道,目的就是建立tiller服务器的连接。还有一个执行函数RunE,具体怎么执行就从这个函数开始,这个函数的功能有验证install命令后带的参数个数是否合适,是否使用development版本,获取chart绝对路径,新建一个配置tiller服务器起的helm客户端,run(下面详细分析)。

定义install这个子命令的局部参数。

func (i *installCmd) run() error {

debug("CHART PATH: %s\n", i.chartPath)

if i.namespace == "" {

i.namespace = defaultNamespace()

}

rawVals, err := vals(i.valueFiles, i.values)

if err != nil {

return err

}

// If template is specified, try to run the template.

if i.nameTemplate != "" {

i.name, err = generateName(i.nameTemplate)

if err != nil {

return err

}

// Print the final name so the user knows what the final name of the release is.

fmt.Printf("FINAL NAME: %s\n", i.name)

}

// Check chart requirements to make sure all dependencies are present in /charts

chartRequested, err := chartutil.Load(i.chartPath)

if err != nil {

return prettyError(err)

}

if req, err := chartutil.LoadRequirements(chartRequested); err == nil {

// If checkDependencies returns an error, we have unfullfilled dependencies.

// As of Helm 2.4.0, this is treated as a stopping condition:

// https://github.com/kubernetes/helm/issues/2209

if err := checkDependencies(chartRequested, req); err != nil {

return prettyError(err)

}

} else if err != chartutil.ErrRequirementsNotFound {

return fmt.Errorf("cannot load requirements: %v", err)

}

res, err := i.client.InstallReleaseFromChart(

chartRequested,

i.namespace,

helm.ValueOverrides(rawVals),

helm.ReleaseName(i.name),

helm.InstallDryRun(i.dryRun),

helm.InstallReuseName(i.replace),

helm.InstallDisableHooks(i.disableHooks),

helm.InstallTimeout(i.timeout),

helm.InstallWait(i.wait))

if err != nil {

return prettyError(err)

}

rel := res.GetRelease()

if rel == nil {

return nil

}

i.printRelease(rel)

// If this is a dry run, we can't display status.

if i.dryRun {

return nil

}

// Print the status like status command does

status, err := i.client.ReleaseStatus(rel.Name)

if err != nil {

return prettyError(err)

}

PrintStatus(i.out, status)

return nil

}

如果没有命名空间就取默认命名空间。

获取所有vlauesFiles和values中的键值对。

如果有相应模板就获取模板(用了template包)。

验证chart包并加载到chart对象中。

检查是否存在requirement,如果存在所含的dependencies是否包含在chart的dependencies中。

执行install(下面详细分析)。

从response中获取Release并输出。

如果是模拟运行就直接返回。

调用ReleaseStatus接口查看现在安装的状态并输出。

// InstallReleaseFromChart installs a new chart and returns the release response.

func (h *Client) InstallReleaseFromChart(chart *chart.Chart, ns string, opts ...InstallOption) (*rls.InstallReleaseResponse, error) {

// apply the install options

for _, opt := range opts {

opt(&h.opts)

}

req := &h.opts.instReq

req.Chart = chart

req.Namespace = ns

req.DryRun = h.opts.dryRun

req.DisableHooks = h.opts.disableHooks

req.ReuseName = h.opts.reuseName

ctx := NewContext()

if h.opts.before != nil {

if err := h.opts.before(ctx, req); err != nil {

return nil, err

}

}

err := chartutil.ProcessRequirementsEnabled(req.Chart, req.Values)

if err != nil {

return nil, err

}

err = chartutil.ProcessRequirementsImportValues(req.Chart)

if err != nil {

return nil, err

}

return h.install(ctx, req)

}

配置helm client的参数。

新建一个install用的request,并将helm client的参数传入这个request。

如果client配置参数中有上下文需要处理就处理上下文。

移除dependencies中不需要的chart,如果requirement存在的话,去除dependencies中requirement中已经有的部分,然后基于chart中的value中的tag和value path筛选掉requirement的dependencies中不符合要求的chart。

整理chart的value值,先是递归找出要安装的这个chartA的dependencies中还含有dependencies的chartB(找出直连的所有非叶子节点),把这些chartB的dependencies即所有子chartC的requirement和自身的value添加到chartB中。

最后install。

// Executes tiller.InstallRelease RPC.

func (h *Client) install(ctx context.Context, req *rls.InstallReleaseRequest) (*rls.InstallReleaseResponse, error) {

c, err := h.connect(ctx)

if err != nil {

return nil, err

}

defer c.Close()

rlc := rls.NewReleaseServiceClient(c)

return rlc.InstallRelease(ctx, req)

}

同grpc连接tiller。

新建一个service客户端,远程调用install接口。

helm客户端分析到此结束。

tiller服务端

tiller服务器启动流程

const (

// tlsEnableEnvVar names the environment variable that enables TLS.

tlsEnableEnvVar = "TILLER_TLS_ENABLE"

// tlsVerifyEnvVar names the environment variable that enables

// TLS, as well as certificate verification of the remote.

tlsVerifyEnvVar = "TILLER_TLS_VERIFY"

// tlsCertsEnvVar names the environment variable that points to

// the directory where Tiller's TLS certificates are located.

tlsCertsEnvVar = "TILLER_TLS_CERTS"

storageMemory = "memory"

storageConfigMap = "configmap"

probeAddr = ":44135"

traceAddr = ":44136"

)

var (

grpcAddr = flag.String("listen", ":44134", "address:port to listen on")

enableTracing = flag.Bool("trace", false, "enable rpc tracing")

store = flag.String("storage", storageConfigMap, "storage driver to use. One of 'configmap' or 'memory'")

remoteReleaseModules = flag.Bool("experimental-release", false, "enable experimental release modules")

tlsEnable = flag.Bool("tls", tlsEnableEnvVarDefault(), "enable TLS")

tlsVerify = flag.Bool("tls-verify", tlsVerifyEnvVarDefault(), "enable TLS and verify remote certificate")

keyFile = flag.String("tls-key", tlsDefaultsFromEnv("tls-key"), "path to TLS private key file")

certFile = flag.String("tls-cert", tlsDefaultsFromEnv("tls-cert"), "path to TLS certificate file")

caCertFile = flag.String("tls-ca-cert", tlsDefaultsFromEnv("tls-ca-cert"), "trust certificates signed by this CA")

// rootServer is the root gRPC server.

//

// Each gRPC service registers itself to this server during init().

rootServer *grpc.Server

// env is the default environment.

//

// Any changes to env should be done before rootServer.Serve() is called.

env = environment.New()

logger *log.Logger

)

配置环境参数,需要TLS的从环境变量中获取相关文件,创建grpc server变量和程序内上下文环境,log。

func main() {

flag.Parse()

if *enableTracing {

log.SetFlags(log.Lshortfile)

}

logger = newLogger("main")

start()

}

解析参数,start。

func start() {

clientset, err := kube.New(nil).ClientSet()

if err != nil {

logger.Fatalf("Cannot initialize Kubernetes connection: %s", err)

}

switch *store {

case storageMemory:

env.Releases = storage.Init(driver.NewMemory())

case storageConfigMap:

cfgmaps := driver.NewConfigMaps(clientset.Core().ConfigMaps(namespace()))

cfgmaps.Log = newLogger("storage/driver").Printf

env.Releases = storage.Init(cfgmaps)

env.Releases.Log = newLogger("storage").Printf

}

kubeClient := kube.New(nil)

kubeClient.Log = newLogger("kube").Printf

env.KubeClient = kubeClient

if *tlsEnable || *tlsVerify {

opts := tlsutil.Options{CertFile: *certFile, KeyFile: *keyFile}

if *tlsVerify {

opts.CaCertFile = *caCertFile

}

}

var opts []grpc.ServerOption

if *tlsEnable || *tlsVerify {

cfg, err := tlsutil.ServerConfig(tlsOptions())

if err != nil {

logger.Fatalf("Could not create server TLS configuration: %v", err)

}

opts = append(opts, grpc.Creds(credentials.NewTLS(cfg)))

}

rootServer = tiller.NewServer(opts...)

lstn, err := net.Listen("tcp", *grpcAddr)

if err != nil {

logger.Fatalf("Server died: %s", err)

}

logger.Printf("Starting Tiller %s (tls=%t)", version.GetVersion(), *tlsEnable || *tlsVerify)

logger.Printf("GRPC listening on %s", *grpcAddr)

logger.Printf("Probes listening on %s", probeAddr)

logger.Printf("Storage driver is %s", env.Releases.Name())

if *enableTracing {

startTracing(traceAddr)

}

srvErrCh := make(chan error)

probeErrCh := make(chan error)

go func() {

svc := tiller.NewReleaseServer(env, clientset, *remoteReleaseModules)

svc.Log = newLogger("tiller").Printf

services.RegisterReleaseServiceServer(rootServer, svc)

if err := rootServer.Serve(lstn); err != nil {

srvErrCh <- err

}

}()

go func() {

mux := newProbesMux()

// Register gRPC server to prometheus to initialized matrix

goprom.Register(rootServer)

addPrometheusHandler(mux)

if err := http.ListenAndServe(probeAddr, mux); err != nil {

probeErrCh <- err

}

}()

select {

case err := <-srvErrCh:

logger.Fatalf("Server died: %s", err)

case err := <-probeErrCh:

logger.Printf("Probes server died: %s", err)

}

}

获取kubernetes内部kubectl内部所用的各种服务的client集合。

在上一步的基础上对其configmap接口进行封装作为tiller的存储driver。

新建一个调用kubernetes API的client,并放进env中。

如果设置了TLS就设置TLS的配置项。

创建grpc server作为tiller服务器。

开启系统tcp端口44135开始监听。

如果开启了trace就开启端口44136提供trace服务。

新建服务用的channel和监测用的channel。

(协程)新建一个提供服务的Release server,并把它注册到grpc server中,开始提供服务。

(协程)建立一个http服务器提供监控服务,用prometheus监控grpc服务器。

select通道阻塞。

// ReleaseServer implements the server-side gRPC endpoint for the HAPI services.

type ReleaseServer struct {

ReleaseModule

env *environment.Environment

clientset internalclientset.Interface

Log func(string, ...interface{})

}

// NewReleaseServer creates a new release server.

func NewReleaseServer(env *environment.Environment, clientset internalclientset.Interface, useRemote bool) *ReleaseServer {

var releaseModule ReleaseModule

if useRemote {

releaseModule = &RemoteReleaseModule{}

} else {

releaseModule = &LocalReleaseModule{

clientset: clientset,

}

}

return &ReleaseServer{

env: env,

clientset: clientset,

ReleaseModule: releaseModule,

Log: func(_ string, _ ...interface{}) {},

}

}

Release server主要包括了程序上下文环境env,kubernetes内的client集合,log函数,和最重要的Release资源访问接口ReleaseModule,这个默认实用本地的kubernetes client包,当然也可以选择grpc远程调用方式,即rudder。tiller服务端提供的所有服务都以Release server的方法的形式存在,下面具体分析Release install方法。

rudder的作用:由tiller中的remoteReleaseModules变量开启,用于远程操作,新建一个grpc client远程调用server端接口,以此作为tiller 底层接口。

install接口

// InstallRelease installs a release and stores the release record.

func (s *ReleaseServer) InstallRelease(c ctx.Context, req *services.InstallReleaseRequest) (*services.InstallReleaseResponse, error) {

s.Log("preparing install for %s", req.Name)

rel, err := s.prepareRelease(req)

if err != nil {

s.Log("failed install prepare step: %s", err)

res := &services.InstallReleaseResponse{Release: rel}

// On dry run, append the manifest contents to a failed release. This is

// a stop-gap until we can revisit an error backchannel post-2.0.

if req.DryRun && strings.HasPrefix(err.Error(), "YAML parse error") {

err = fmt.Errorf("%s\n%s", err, rel.Manifest)

}

return res, err

}

s.Log("performing install for %s", req.Name)

res, err := s.performRelease(rel, req)

if err != nil {

s.Log("failed install perform step: %s", err)

}

return res, err

} installRelease函数比较简单,先进行预处理prepareRelease,然后没问题再执行performRelease,最后返回response

// prepareRelease builds a release for an install operation.

func (s *ReleaseServer) prepareRelease(req *services.InstallReleaseRequest) (*release.Release, error) {

if req.Chart == nil {

return nil, errMissingChart

}

name, err := s.uniqName(req.Name, req.ReuseName)

if err != nil {

return nil, err

}

caps, err := capabilities(s.clientset.Discovery())

if err != nil {

return nil, err

}

revision := 1

ts := timeconv.Now()

options := chartutil.ReleaseOptions{

Name: name,

Time: ts,

Namespace: req.Namespace,

Revision: revision,

IsInstall: true,

}

valuesToRender, err := chartutil.ToRenderValuesCaps(req.Chart, req.Values, options, caps)

if err != nil {

return nil, err

}

hooks, manifestDoc, notesTxt, err := s.renderResources(req.Chart, valuesToRender, caps.APIVersions)

if err != nil {

// Return a release with partial data so that client can show debugging

// information.

rel := &release.Release{

Name: name,

Namespace: req.Namespace,

Chart: req.Chart,

Config: req.Values,

Info: &release.Info{

FirstDeployed: ts,

LastDeployed: ts,

Status: &release.Status{Code: release.Status_UNKNOWN},

Description: fmt.Sprintf("Install failed: %s", err),

},

Version: 0,

}

if manifestDoc != nil {

rel.Manifest = manifestDoc.String()

}

return rel, err

}

// Store a release.

rel := &release.Release{

Name: name,

Namespace: req.Namespace,

Chart: req.Chart,

Config: req.Values,

Info: &release.Info{

FirstDeployed: ts,

LastDeployed: ts,

Status: &release.Status{Code: release.Status_UNKNOWN},

Description: "Initial install underway", // Will be overwritten.

},

Manifest: manifestDoc.String(),

Hooks: hooks,

Version: int32(revision),

}

if len(notesTxt) > 0 {

rel.Info.Status.Notes = notesTxt

}

err = validateManifest(s.env.KubeClient, req.Namespace, manifestDoc.Bytes())

return rel, err

} 检查是否重名,如果没重或者与之前已经被删除的Release重了的话,就正常返回,否则报错。<br /> 获取所有支持的API、kubernetes、tiller的版本号。<br /> 配置Release需要的额外状态参数。<br /> 获取填模板所用的字典。<br /> 根据request和上面获取的字典和API版本号,验证确认API版本号,渲染模板文件内容,隔离NOTES.txt,为各个文件建立manifest和hook对象并按照installorder排序(renderResource函数的作用)。<br /> 根据上面的内容新建一个release对象。<br /> 验证提供文件内容的合法性。

// performRelease runs a release.

func (s *ReleaseServer) performRelease(r *release.Release, req *services.InstallReleaseRequest) (*services.InstallReleaseResponse, error) {

res := &services.InstallReleaseResponse{Release: r}

if req.DryRun {

s.Log("dry run for %s", r.Name)

res.Release.Info.Description = "Dry run complete"

return res, nil

}

// pre-install hooks

if !req.DisableHooks {

if err := s.execHook(r.Hooks, r.Name, r.Namespace, hooks.PreInstall, req.Timeout); err != nil {

return res, err

}

} else {

s.Log("install hooks disabled for %s", req.Name)

}

switch h, err := s.env.Releases.History(req.Name); {

// if this is a replace operation, append to the release history

case req.ReuseName && err == nil && len(h) >= 1:

s.Log("name reuse for %s requested, replacing release", req.Name)

// get latest release revision

relutil.Reverse(h, relutil.SortByRevision)

// old release

old := h[0]

// update old release status

old.Info.Status.Code = release.Status_SUPERSEDED

s.recordRelease(old, true)

// update new release with next revision number

// so as to append to the old release's history

r.Version = old.Version + 1

updateReq := &services.UpdateReleaseRequest{

Wait: req.Wait,

Recreate: false,

Timeout: req.Timeout,

}

if err := s.ReleaseModule.Update(old, r, updateReq, s.env); err != nil {

msg := fmt.Sprintf("Release replace %q failed: %s", r.Name, err)

s.Log("warning: %s", msg)

old.Info.Status.Code = release.Status_SUPERSEDED

r.Info.Status.Code = release.Status_FAILED

r.Info.Description = msg

s.recordRelease(old, true)

s.recordRelease(r, false)

return res, err

}

default:

// nothing to replace, create as normal

// regular manifests

if err := s.ReleaseModule.Create(r, req, s.env); err != nil {

msg := fmt.Sprintf("Release %q failed: %s", r.Name, err)

s.Log("warning: %s", msg)

r.Info.Status.Code = release.Status_FAILED

r.Info.Description = msg

s.recordRelease(r, false)

return res, fmt.Errorf("release %s failed: %s", r.Name, err)

}

}

// post-install hooks

if !req.DisableHooks {

if err := s.execHook(r.Hooks, r.Name, r.Namespace, hooks.PostInstall, req.Timeout); err != nil {

msg := fmt.Sprintf("Release %q failed post-install: %s", r.Name, err)

s.Log("warning: %s", msg)

r.Info.Status.Code = release.Status_FAILED

r.Info.Description = msg

s.recordRelease(r, false)

return res, err

}

}

r.Info.Status.Code = release.Status_DEPLOYED

r.Info.Description = "Install complete"

// This is a tricky case. The release has been created, but the result

// cannot be recorded. The truest thing to tell the user is that the

// release was created. However, the user will not be able to do anything

// further with this release.

//

// One possible strategy would be to do a timed retry to see if we can get

// this stored in the future.

s.recordRelease(r, false)

return res, nil

}

新建一个response。

如果是dry run就返回。

如果没有关闭hook,先执行pre-install的hook。具体流程(execHook):从hook数组中根据event找出pre-install的hook,按hook自身的权重weight排序,将排好序的hook依次用kube client去创建资源,然后进行观察,直到ready状态再返回(ready表示已经被成功创建,并不是意味着已经创建好了)。

判断release的名字是否是已经用过的,若是就记录下来并更新版本号然后执行update;若不是就去创建资源。

如果没有关闭hook,就去执行post-install的hook。

回复response。